The following is a guest article written by Owen Burton for, and originally posted in, Visitor Focus on the Association of Independent Museums website.

Working in digital heritage is exciting: as technology improves all the time, the possibilities of what can be achieved continue to grow. Here AIM Associate Supplier Experience Heritage explore some of the best methods for heritage sites to produce engaging displays while managing the impact of social distancing.

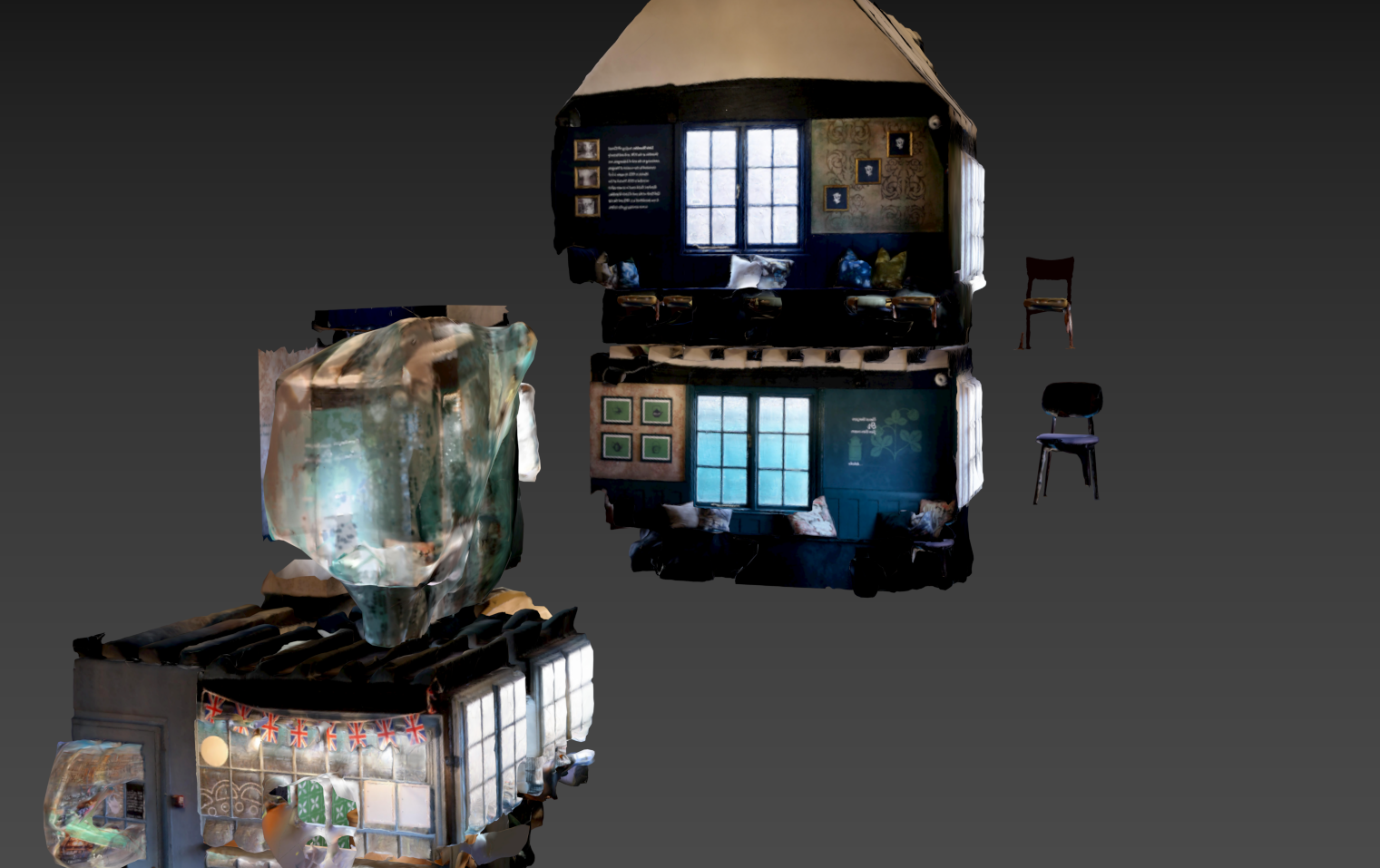

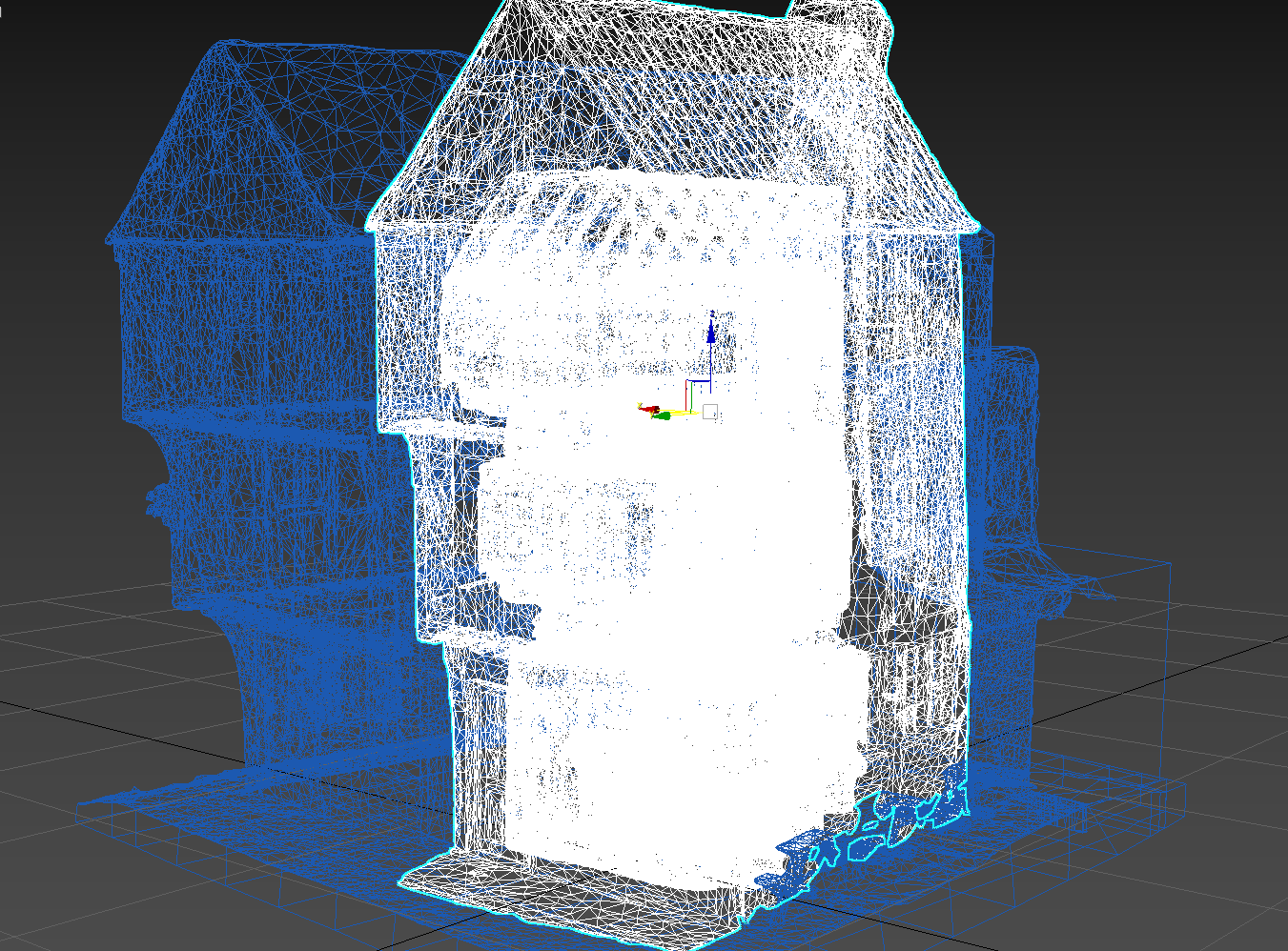

Possibilities with Photogrammetry and Augmented Reality

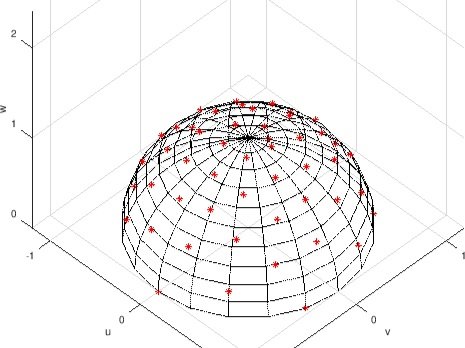

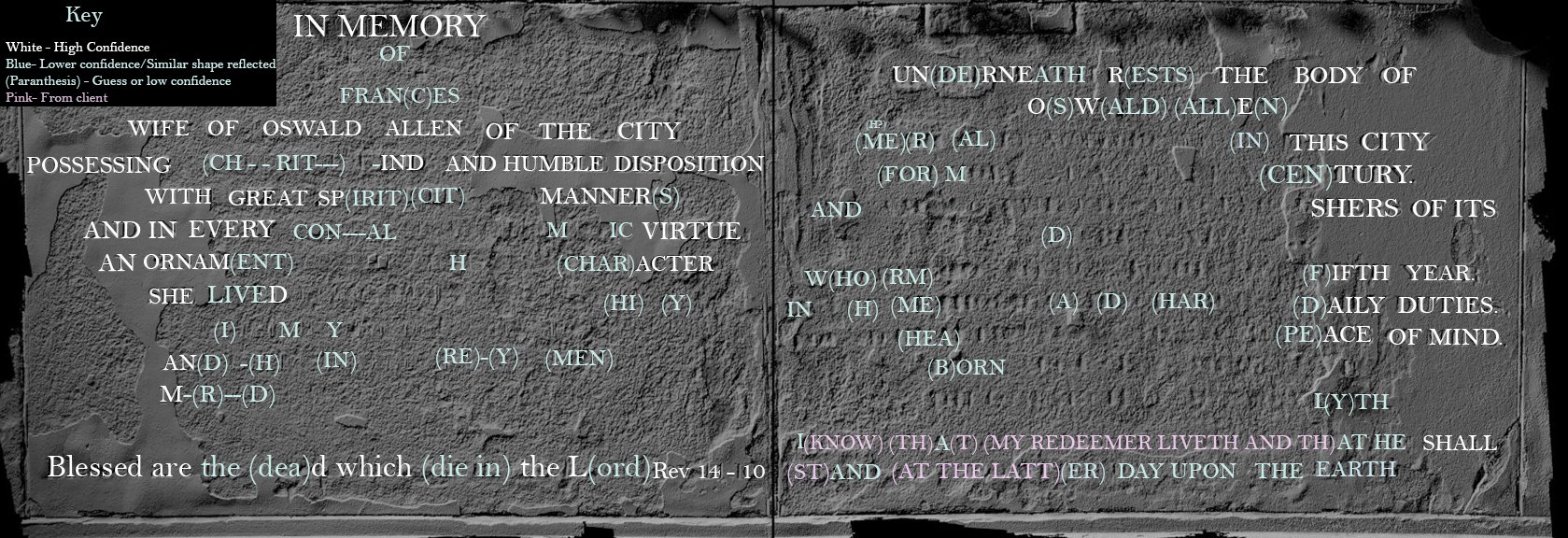

Photogrammetry (creating a 3D model by stitching together a group of photographs) allows heritage sites to make pieces of their collection accessible online. People can interact with these objects in a new way and from different angles, all from the comfort of their own living room. In these challenging times, heritage site staff could be taught how to take the required photos of a given object, or even how to use the modelling software themselves.

Augmented Reality mobile apps can place historic reconstructions of sites over the current landscape to enable the public to visualise what used to be there, providing opportunities for storytelling to help bring inaccessible sites to life.

Mobile apps and virtual tours

There is an increasing awareness of the possibilities of heritage trail and self-guided tour mobile apps, another opportunity for interactive engagement with history while maintaining social distancing. Virtual tours have allowed digital access to sites that have been closed during lockdown: people have been virtually wandering around sites such as the British Museum, the Louvre and the Van Gogh Museum.

Pause for thought . . . and communication

Lockdown has provided us with opportunities to learn and space to reflect. Heritage roundtables and webinars have lent greater clarity to the day-to-day realities of what sites have been going through and what their focus points are. These priorities have included expanding audience engagement through digital opportunities and ensuring that digital communication is accessible to people with disabilities.

If you would like to explore possibilities for digital engagement, like photogrammetry, augmented reality or heritage trail apps, Experience Heritage would love to help. Visit our website at www.experience-heritage.com or email us at info@experience-heritage.com.

Pictured: Mock-up of an AR app for Slingsby Castle by Experience Heritage.